Background: Inefficiencies in patient recruitment for lupus trials can lead to prolonged trial durations, increased costs, and delays in bringing effective treatments to market. Applying Bayesian optimization methods in trial design makes it possible to design response-adaptive trials that optimize the allocation of patients to more promising arms. We retrospectively analyzed a failed phase 2 randomized controlled trial of a lupus therapeutic agent.

Objectives: Based on the potential features accounting for heterogeneity in response to treatment in this trial, we aim to use our interactive adaptive-trial AI simulation tool to simulate a clinical trial run on a highly responsive sub-population.

Methods: To demonstrate the potential efficiency gains from the tool, we applied our proprietary algorithms, which incorporate Bayesian optimization tools, in a retrospective analysis of a failed phase 2 randomized controlled trial with 3 treatment arms and a standard-of-care control arm. Because arm efficacy is uncertain at the trial design stage, we ran (i) a retrospective analysis of the failed phase 2 study, which revealed multiple factors as potential predictors of response to treatment, and (ii) a sensitivity analysis on two scenarios to ensure robustness of the adaptive design. Scenario 1: only one arm is efficacious; Scenario 2: two arms are efficacious. In both scenarios, efficacy was defined as a ~40% response rate, and the control arm response rate was set at ~25%, as seen in the original failed trial. The one-sided type I error was set to α=0.05. Power was evaluated in the range of 75-90%. A response-adaptive randomization design with a single interim analysis, set to occur after approximately 30% of subjects had reached the measured outcome (‘adaptive design’), was simulated 100,000 times and compared with a fixed trial design. Validation was performed using multiple-point sensitivity analyses.
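The comparison described in the Methods can be illustrated with a minimal simulation sketch. It is not the proprietary algorithm used in this study. It assumes immediate outcomes, Beta(1,1) priors, a simple posterior-probability-of-being-best allocation rule after the interim, a control arm that keeps a fixed 1/k share of allocation, and a naive pooled z-test with no adjustment for multiplicity or for the adaptation itself. All function names are hypothetical, and the sketch is not expected to reproduce the sample sizes reported here.

```python
import random
from statistics import NormalDist


def one_sided_p(x_t, n_t, x_c, n_c):
    """One-sided p-value of a pooled two-proportion z-test (treatment > control)."""
    if n_t == 0 or n_c == 0:
        return 1.0
    pooled = (x_t + x_c) / (n_t + n_c)
    se = (pooled * (1 - pooled) * (1 / n_t + 1 / n_c)) ** 0.5
    if se == 0:
        return 1.0
    return 1 - NormalDist().cdf((x_t / n_t - x_c / n_c) / se)


def interim_weights(x, n, rng, draws=500):
    """Assumed allocation rule: control keeps 1/k of allocation; treatment arms
    share the rest in proportion to a Monte Carlo estimate of the posterior
    probability of having the highest response rate (Beta(1,1) priors)."""
    k = len(n)
    best = [0] * k
    for _ in range(draws):
        samples = [rng.betavariate(1 + x[a], 1 + n[a] - x[a]) for a in range(1, k)]
        best[1 + samples.index(max(samples))] += 1
    return [1 / k] + [(1 - 1 / k) * best[a] / draws for a in range(1, k)]


def simulate_trial(rates, n_total, adaptive=True, interim_frac=0.3,
                   alpha=0.05, rng=random):
    """Simulate one trial; rates[0] is the control response rate.
    Returns one boolean per treatment arm: significant versus control?"""
    k = len(rates)
    x, n = [0] * k, [0] * k          # responders and subjects per arm
    weights = [1.0] * k              # equal allocation before the interim
    n_interim = int(interim_frac * n_total)
    for i in range(n_total):
        if adaptive and i == n_interim:
            weights = interim_weights(x, n, rng)   # single interim update
        arm = rng.choices(range(k), weights=weights)[0]
        n[arm] += 1
        x[arm] += rng.random() < rates[arm]
    return [one_sided_p(x[a], n[a], x[0], n[0]) < alpha for a in range(1, k)]


def estimate_power(rates, n_total, adaptive, n_sims=500, seed=0):
    """Fraction of simulated trials in which at least one arm whose true rate
    exceeds control reaches significance."""
    rng = random.Random(seed)
    effective = [r > rates[0] for r in rates[1:]]
    hits = 0
    for _ in range(n_sims):
        significant = simulate_trial(rates, n_total, adaptive=adaptive, rng=rng)
        hits += any(s and e for s, e in zip(significant, effective))
    return hits / n_sims
```

Under these assumptions, Scenario 2 could be encoded as `rates = [0.25, 0.40, 0.40, 0.25]` (the non-efficacious arm is assumed to respond like control), and `estimate_power(rates, n_total, adaptive=True)` compared with `adaptive=False` across a grid of `n_total` values to find the smallest sample size that reaches a target power.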

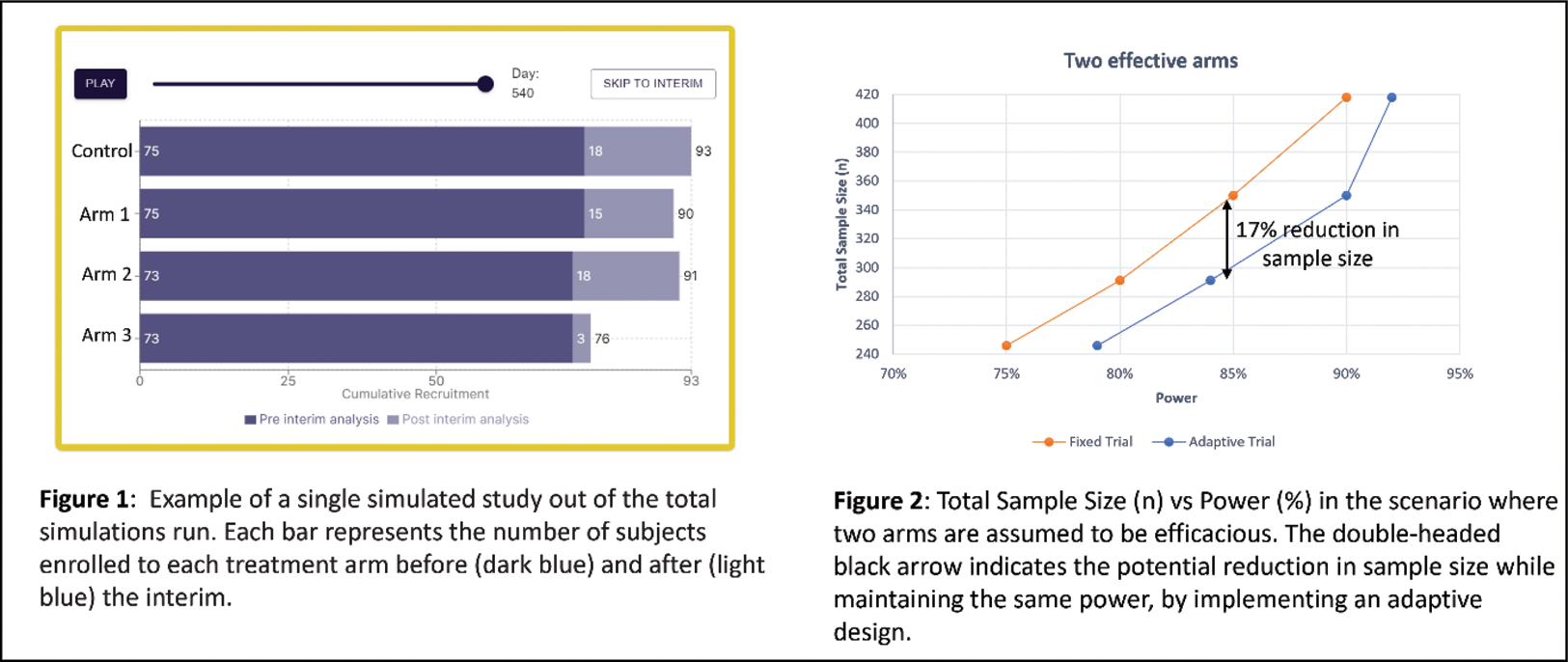

Results: At 85% power, the adaptive design, compared with the fixed design, achieved potential reductions in sample size estimated at 17% and 15% for the two-efficacious-arms scenario (291 vs 350 subjects, Figure 2) and the one-efficacious-arm scenario (370 vs 438 subjects), respectively. Figure 1 presents a single simulation run of the adaptive design from the interactive adaptive-trial AI simulation tool. Two hundred and ninety-six subjects were recruited at a 1:1 allocation ratio prior to the interim analysis (of whom at least 122 had reached the outcome measure). The interim analysis revealed two efficacious arms, and patients recruited post-interim were diverted mainly to those arms, enabling the trial to achieve significance with a smaller average total sample size (291 vs 350 subjects, as mentioned above).

Conclusion: By using an interactive adaptive-trial AI simulation tool to simulate and visualize trial design, we achieved a potential reduction in sample size and an increase in the proportion of lupus patients allocated to efficacious treatment arms, based on select features explaining heterogeneity in subsets of patients. Further evaluation of additional case studies is ongoing.

REFERENCES: NIL.

Acknowledgements: NIL.

Disclosure of Interests: Tahel Ilan Ber: None declared, Dan Goldstaub: Merck-MSD, Teva, Oshri Machluf: None declared, Neta Shanwetter Levit: None declared, Roni Cohen: None declared, Yaron Racah: None declared, Elad Berkman: None declared, Raviv Pryluk: None declared, Murray B Urowitz: PhaseV Trials Inc.