Background: The EULAR 2024-2029 European Manifesto [1] highlights a critical healthcare challenge: shortages in the rheumatology workforce threaten care provision for 120 million Europeans with rheumatic diseases (RD), which account for 10-20% of primary care consultations. Given the shortage of rheumatologists and the burden of musculoskeletal disease in primary care, there is an urgent need for tools that can both identify potential RD cases and confidently rule out disease. Early identification enables targeted prevention and treatment, while reliable disease exclusion can reduce unnecessary specialist referrals, optimizing limited rheumatology resources. The digital symptom checker Rheumatic? [2] was developed to address these complementary needs.

Objectives: Based on Rheumatic? responses, we developed models for immune-mediated rheumatic diseases (IRD), osteoarthritis (OA), and fibromyalgia (FM) to:

i) confirm diagnoses already received at baseline.

ii) predict new diagnoses within one year.

Methods: Rheumatic? is a questionnaire consisting of 12 main questions and up to 64 optional sub-questions. We conducted a prospective study, recruiting participants online, at primary care clinics, and at rheumatology clinics. Participants completed Rheumatic? and were followed up by email at 3, 6 and 12 months to document any diagnoses received subsequently. We validated the reliability of self-reported diagnoses by physician verification in a hospital subset. We used two datasets: I) for model development, containing patients recruited between June 2021 and Feb 2023, and II) for external validation, containing patients recruited between Feb 2023 and June 2023. For predicting new diagnoses, we excluded participants who reported at baseline that they had already received the diagnosis to be predicted.
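The dataset construction described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the study's code: the `Participant` record, its field names, the helper functions, and the exact split date are hypothetical (the abstract gives recruitment periods only at month granularity). The follow-up requirement assumes that, as in the Results, only participants who completed at least one follow-up survey can contribute to predicting new diagnoses.

```python
from dataclasses import dataclass, field
from datetime import date

@dataclass
class Participant:
    # Hypothetical record layout; field names are illustrative, not from the study.
    pid: str
    recruited: date
    baseline_dx: set = field(default_factory=set)  # diagnoses self-reported at baseline
    followup_dx: set = field(default_factory=set)  # new diagnoses reported at 3, 6 or 12 months
    n_followups: int = 0                           # number of follow-up surveys completed

# Assumed boundary between dataset I and dataset II; the abstract only states "Feb 2023".
SPLIT_DATE = date(2023, 2, 1)

def temporal_split(participants):
    """Earlier recruits form dataset I (development), later recruits dataset II (external validation)."""
    dataset_1 = [p for p in participants if p.recruited < SPLIT_DATE]
    dataset_2 = [p for p in participants if p.recruited >= SPLIT_DATE]
    return dataset_1, dataset_2

def prediction_cohort(participants, diagnosis):
    """Cohort for predicting a *new* diagnosis within one year.

    Excludes participants who already reported `diagnosis` at baseline and those
    without any completed follow-up survey. Returns (participant, label) pairs,
    where label is 1 if the diagnosis was reported during follow-up.
    """
    return [
        (p, int(diagnosis in p.followup_dx))
        for p in participants
        if diagnosis not in p.baseline_dx and p.n_followups >= 1
    ]
```

Splitting by recruitment date rather than at random is what makes dataset II a temporal external validation: the model is tested on people recruited after all development data were collected.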

We developed the models in three steps, following best practices for model validation [3, 4]:

1. Method development on dataset I

   1a. Training and method selection on a 66.7% split, to select the final method and create the first model.

   1b. Internal validation of the model from 1a on the remaining 33.3%. This step estimates the unbiased internal performance of the first model.

   1c. Stability assessment on the complete dataset I, emulating the original split five times.

2. Final model building: training the final model on the complete dataset I.

3. External validation: assessing generalizability on dataset II, whose patients were recruited at a later time.
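The validation protocol can be sketched as follows. This is a minimal illustration, not the study's pipeline: the `fit` interface (a function that takes training rows and returns a scoring function), the random seeds, and the toy model `fit_mean_difference` are hypothetical stand-ins for the actual candidate methods. AUC-ROC is computed with the rank (Mann-Whitney) formulation, and stability is summarised as the largest AUC-ROC difference between repeated splits.

```python
import random
from statistics import mean

def auc_roc(y_true, scores):
    """AUC-ROC as the probability that a random case scores higher than a random
    non-case (Mann-Whitney formulation; ties count as 1/2)."""
    pos = [s for s, y in zip(scores, y_true) if y == 1]
    neg = [s for s, y in zip(scores, y_true) if y == 0]
    wins = sum(1.0 if a > b else 0.5 if a == b else 0.0 for a in pos for b in neg)
    return wins / (len(pos) * len(neg))

def split(rows, train_frac=2 / 3, seed=0):
    """Random 66.7% / 33.3% split of (features, label) rows."""
    rows = list(rows)
    random.Random(seed).shuffle(rows)
    cut = round(len(rows) * train_frac)
    return rows[:cut], rows[cut:]

def evaluate(fit, train, test):
    """Fit on `train` and return the AUC-ROC on `test`; `fit` returns a scoring function."""
    score = fit(train)
    return auc_roc([y for _, y in test], [score(x) for x, _ in test])

def validation_protocol(fit, dataset_1, dataset_2, n_repeats=5):
    # Method development: train on 66.7% of dataset I, validate internally on the held-out 33.3%.
    internal_auc = evaluate(fit, *split(dataset_1, seed=0))
    # Stability: emulate the original split n_repeats times with new seeds and
    # report the largest AUC-ROC difference between repeats.
    repeat_aucs = [evaluate(fit, *split(dataset_1, seed=s)) for s in range(1, n_repeats + 1)]
    stability_range = max(repeat_aucs) - min(repeat_aucs)
    # Final model on all of dataset I, validated externally on later recruits (dataset II).
    external_auc = evaluate(fit, dataset_1, dataset_2)
    return internal_auc, stability_range, external_auc

def fit_mean_difference(train):
    """Hypothetical toy model: weight each binary symptom by its frequency in cases
    minus its frequency in non-cases, and score by the weighted sum."""
    cases = [x for x, y in train if y == 1]
    controls = [x for x, y in train if y == 0]
    weights = [mean(c[j] for c in cases) - mean(c[j] for c in controls)
               for j in range(len(train[0][0]))]
    return lambda x: sum(w * xj for w, xj in zip(weights, x))
```

Passing the model as a `fit` callable keeps the protocol independent of the learning method, so the same splits can be reused to compare candidate methods before one is selected.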

We employed two methodological approaches:

A. Logistic regression, using self-reported symptoms selected by a specialist.

B. An ensemble of AdaBoost learners.
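For approach B, the abstract does not describe how the AdaBoost learners were configured or combined. The sketch below assumes discrete AdaBoost with one-feature decision stumps on binary (yes/no) answers, plus a hypothetical ensemble that averages the scores of AdaBoost models fit on bootstrap resamples; both are illustrative assumptions, and all function names are hypothetical. Approach A would correspond to an ordinary logistic regression on the specialist-selected symptoms and is not repeated here.

```python
import math
import random

def stump(x, j, s):
    """One-feature decision stump on a binary answer: predicts s if x[j] == 1, else -s."""
    return s if x[j] == 1 else -s

def fit_adaboost(X, y, n_rounds=20):
    """Discrete AdaBoost with decision stumps; y holds 0/1 labels.
    Returns a list of (feature index, sign, alpha) weak learners."""
    n, k = len(X), len(X[0])
    t = [1 if yi == 1 else -1 for yi in y]   # AdaBoost works with -1/+1 labels
    w = [1.0 / n] * n                         # start with uniform participant weights
    learners = []
    for _ in range(n_rounds):
        # Choose the stump with the lowest weighted error under the current weights.
        best = None
        for j in range(k):
            for s in (1, -1):
                err = sum(wi for xi, ti, wi in zip(X, t, w) if stump(xi, j, s) != ti)
                if best is None or err < best[0]:
                    best = (err, j, s)
        err, j, s = best
        if err >= 0.5:
            break                             # no stump beats chance: stop early
        err = max(err, 1e-10)                 # guard against log(0) for a perfect stump
        alpha = 0.5 * math.log((1 - err) / err)
        learners.append((j, s, alpha))
        # Up-weight misclassified participants, down-weight correct ones, renormalise.
        w = [wi * math.exp(-alpha * ti * stump(xi, j, s)) for xi, ti, wi in zip(X, t, w)]
        total = sum(w)
        w = [wi / total for wi in w]
    return learners

def adaboost_score(learners, x):
    """Weighted vote of the stumps; higher scores mean higher predicted disease risk."""
    return sum(alpha * stump(x, j, s) for j, s, alpha in learners)

def fit_ensemble(X, y, n_models=5, seed=0):
    """Hypothetical ensemble: AdaBoost models fit on bootstrap resamples of the data."""
    rng = random.Random(seed)
    models = []
    for _ in range(n_models):
        idx = [rng.randrange(len(X)) for _ in range(len(X))]
        models.append(fit_adaboost([X[i] for i in idx], [y[i] for i in idx]))
    return models

def ensemble_score(models, x):
    """Average the AdaBoost scores of the ensemble members."""
    return sum(adaboost_score(m, x) for m in models) / len(models)
```

In practice a library implementation (for example scikit-learn's `AdaBoostClassifier`) would normally be used; the hand-written version only makes the reweighting step explicit.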

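The models were evaluated using the AUC-ROC, the calibration slope (linear regression without intercept), and decision curve analysis, where the net benefit at threshold probability $p_t$ is

$$\text{Net Benefit} = \frac{TP}{N} - \frac{FP}{N} \times \frac{p_t}{1 - p_t}$$

with TP and FP the numbers of true and false positives and N the number of participants.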
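The calibration slope and net benefit can be computed as in the sketch below. Assumptions: the calibration slope regresses the observed 0/1 outcome on the predicted probability through the origin, following the abstract's wording (it is more often estimated by logistic regression of the outcome on the logit of the predicted probability); a participant counts as test-positive when the predicted probability is at or above the threshold; and the "treat all" and "treat none" strategies are the usual decision-curve comparators. Function names are hypothetical.

```python
def calibration_slope(y_true, probs):
    """Least-squares slope of observed outcome on predicted probability, no intercept:
    minimises sum((y - b*p)^2), giving b = sum(p*y) / sum(p^2). A slope of 1 is ideal."""
    num = sum(p * y for p, y in zip(probs, y_true))
    den = sum(p * p for p in probs)
    return num / den

def net_benefit(y_true, probs, threshold):
    """Net benefit = TP/N - FP/N * pt / (1 - pt), with test-positive meaning prob >= pt.
    Requires 0 < threshold < 1."""
    n = len(y_true)
    tp = sum(1 for y, p in zip(y_true, probs) if p >= threshold and y == 1)
    fp = sum(1 for y, p in zip(y_true, probs) if p >= threshold and y == 0)
    return tp / n - (fp / n) * threshold / (1 - threshold)

def decision_curve(y_true, probs, thresholds):
    """For each threshold, return (pt, model NB, treat-all NB, treat-none NB).
    A model adds value at pt when its NB exceeds both reference strategies."""
    prevalence = sum(y_true) / len(y_true)
    curve = []
    for pt in thresholds:
        treat_all = prevalence - (1 - prevalence) * pt / (1 - pt)
        curve.append((pt, net_benefit(y_true, probs, pt), treat_all, 0.0))
    return curve
```

For example, with outcomes `[1, 0, 1, 0]` and predicted probabilities `[0.8, 0.3, 0.6, 0.1]`, the model's net benefit at a threshold of 0.2 is 0.4375, above the 0.375 of treating everyone.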

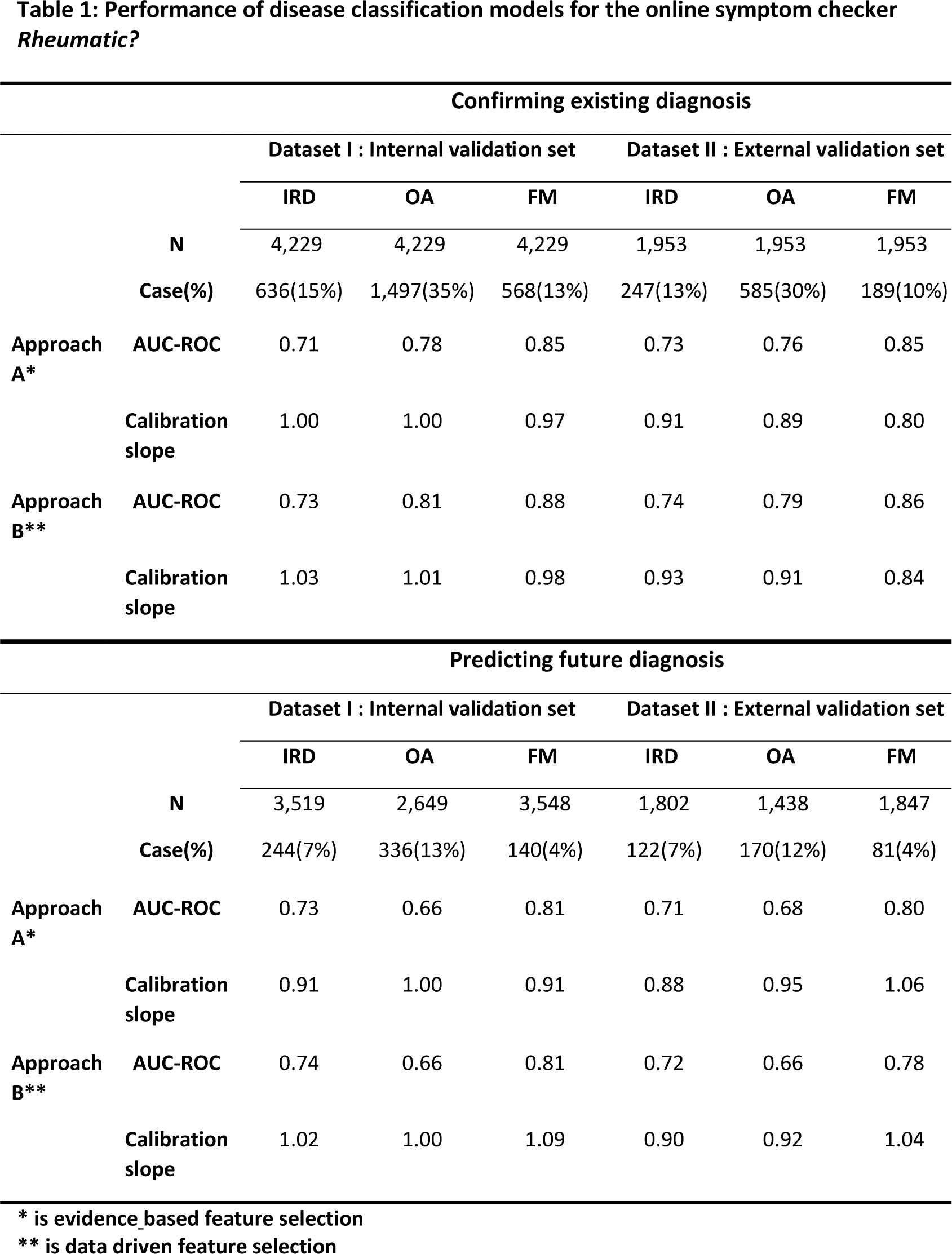

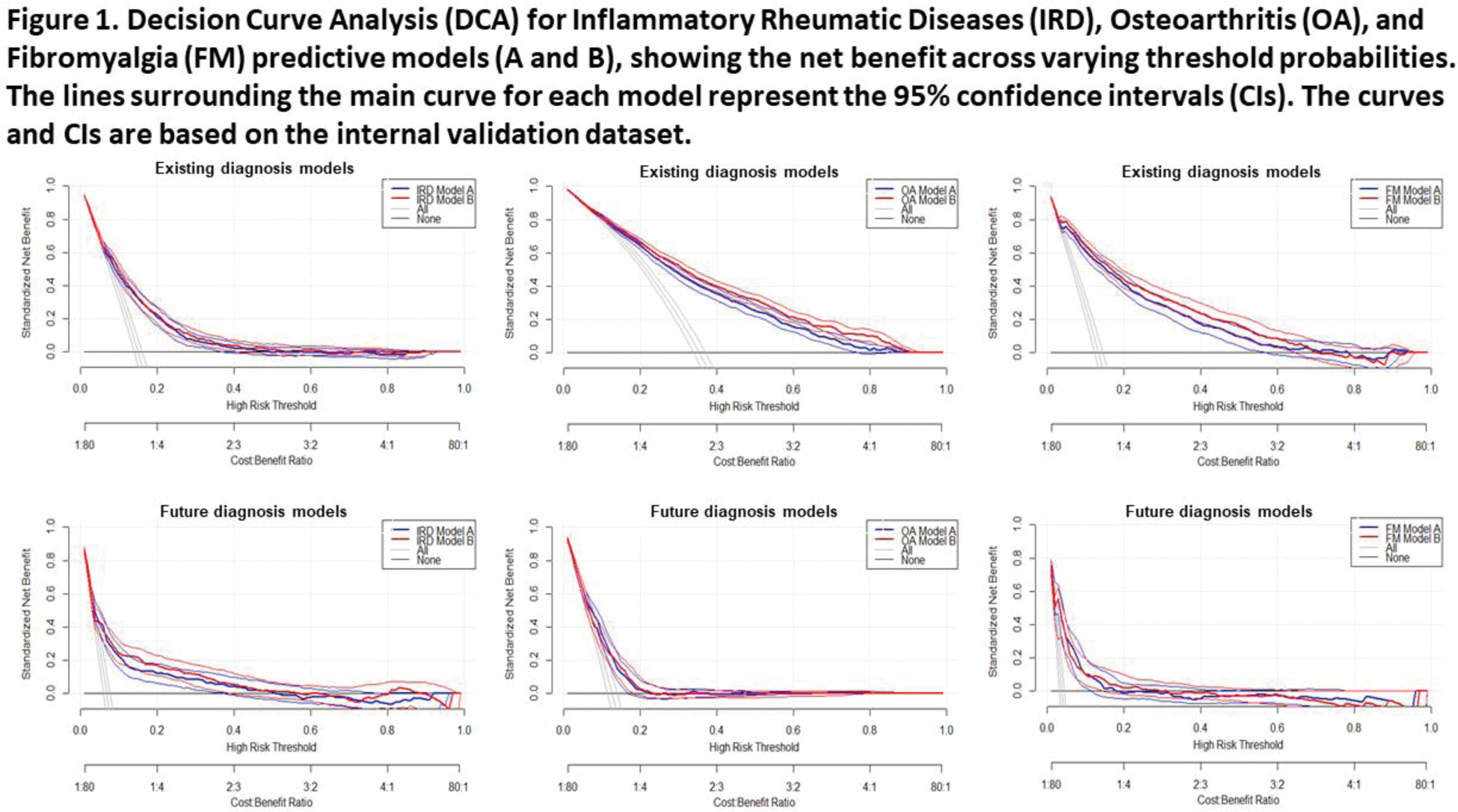

Results: A total of 37,555 participants were recruited for dataset I. Of these, 12,700 completed the initial survey on current diagnoses and 10,581 completed at least one follow-up survey on new diagnoses. For the model confirming current diagnoses, dataset II contained 1,953 participants. For the model predicting diagnoses within the next year, the external validation set included 1,802 participants for IRD, 1,438 for OA, and 1,847 for FM. The reliability of self-reported diagnoses was confirmed by examining the hospital medical records of 310 patients, which showed high concordance: 89% for IRD, 89% for OA, and 94% for FM. During training on the 66.7% split of dataset I, the method selected for approach A was logistic regression using 24 key features, while approach B used an ensemble of AdaBoost learners. Both approaches were stable, with a maximum difference in AUC-ROC of 0.06 between folds. All models performed robustly in both internal and external validation (Table 1). For confirmation of diagnoses received at baseline, both approaches performed similarly: the AUC-ROC in external validation for approaches A and B was 0.73 and 0.74 for IRD, 0.76 and 0.79 for OA, and 0.85 and 0.86 for FM, respectively. Calibration slopes were around 0.90 for IRD and OA, and 0.84 for FM. For prediction of new diagnoses, the AUC-ROC in external validation for approaches A and B was 0.71 and 0.72 for IRD, 0.68 and 0.66 for OA, and 0.80 and 0.78 for FM, respectively. Calibration slopes again showed good agreement between predicted and observed outcomes for all three disease categories. Subset analyses in both the internal and external datasets showed consistent results across sexes and recruitment sources. Decision curve analysis (Figure 1) showed positive net benefit across a range of threshold probabilities for all models.
For prediction of future IRD, OA and FM diagnoses, net benefit was particularly good at lower probability thresholds, indicating that the algorithms can effectively rule out disease. For confirmation of OA or FM diagnoses, net benefit was also good at higher thresholds, indicating that the algorithms can confirm a previous diagnosis.

Conclusion: Our algorithms for Rheumatic? provide clinicians with a validated digital tool for assessing rheumatic diseases. The algorithms are stable and generalizable, and show particular strength in ruling out IRD, potentially reducing unnecessary specialist referrals. For current diagnoses, their high performance in confirming FM and OA supports their use for diagnostic confirmation. Implementation could streamline patient triage in primary care while ensuring appropriate specialist referrals, addressing both quality of care and workforce challenges. Further studies will focus on generalizability to other European settings.

REFERENCES: [1]

[2] Doi: 10.2196/17507.

[3] Doi: 10.1136/bmj-2023-078276.

[4] Doi: 10.1136/bmj-2023-074819.

Acknowledgements: This study was supported by Horizon-EU within the SPIDERR-project (activity No. 101080711), and the DigiPrevent programme (funded in April 2022) from EIT Health. The project has further received funding from the Dutch Organization for Health Research and Development (ZonMw) via the Klinische Fellow No. 40-00703-97-19069, and Open Competitie, No. 09120012110075.

Disclosure of Interests: None declared.

© The Authors 2025. This abstract is an open access article published in Annals of Rheumatic Diseases under the CC BY-NC-ND license.